July 3 • 44 min read

Is Your Next Hire Real? Detecting AI-Generated Resumes and Augmenting the Modern Recruiter

Recruiters are facing an "applicant tsunami" as AI tools enable a massive surge in job applications, many of which are embellished or entirely fake.

The talent acquisition landscape is facing a paradigm-shifting crisis driven by the proliferation of generative artificial intelligence (AI). Recruiters are no longer just managing high application volumes; they are contending with an "applicant tsunami" where a significant and growing percentage of submissions are authored, embellished, or entirely fabricated by AI tools.

The proliferation of generative AI has precipitated an existential crisis in talent acquisition. Traditional recruitment, built on a foundation of categorical thinking—sorting candidates into rigid bins of "qualified" or "unqualified" based on static resumes—is no longer viable. AI-powered tools are systematically overwhelming these legacy systems, creating a deluge of homogenized, often fraudulent, applications that bury authentic talent and introduce unprecedented security risks.

In this article, we provide an analysis of this challenge, examining its scale, impact, and potential solutions, while proposing a strategic framework for navigating this new reality.

The problem has evolved from a productivity challenge into a critical corporate security threat. The surge in applications, with platforms like LinkedIn reporting a 45% increase in submissions [1], is compounded by the rise of completely fraudulent candidates. These are not merely embellished resumes but synthetic identities, often backed by deepfake technology and, in some cases, linked to state-sponsored actors seeking to infiltrate corporations for espionage or financial gain. [1] Projections indicate that by 2028, one in four job applicants globally could be fake, a testament to the industrial scale of this threat. [3]

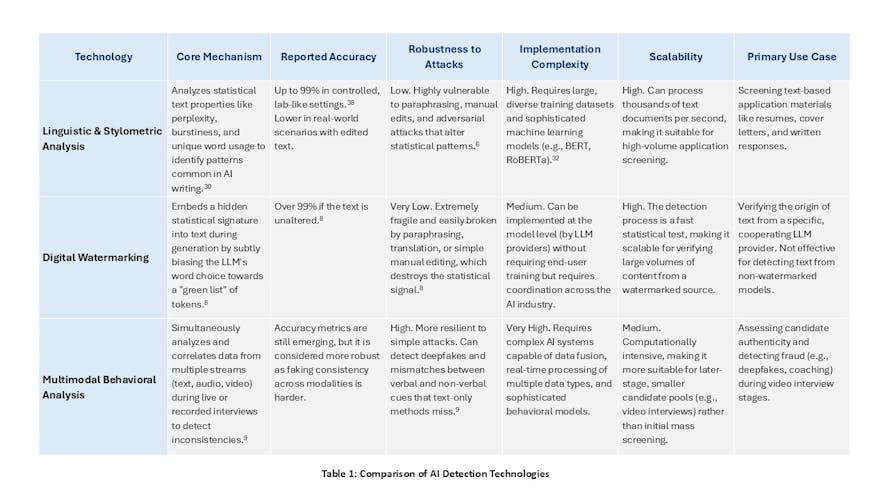

This deluge has a profound impact on the recruitment function. It has led to a collapse in the "signal-to-noise ratio," necessitating a strategic shift in the recruiter's role from proactive talent discovery to reactive fraud detection. The overwhelming volume of homogenized, AI-generated applications leads to recruiter burnout, erodes trust in the hiring process, and renders traditional efficiency metrics, such as "time-to-fill," dangerously misleading. [4] In response, a technological arms race has emerged, with organizations deploying AI to detect other AI systems. The following analysis examines the leading technological defenses—linguistic and stylometric analysis, digital watermarking, and multimodal behavioral analysis—as organizations seek to regain control of an increasingly automated applicant landscape. While models based on linguistic patterns can achieve high accuracy in controlled settings, they remain vulnerable to simple paraphrasing and adversarial attacks [6]. Digital watermarking, a promising concept for embedding hidden signatures in AI text, is currently too fragile for widespread, reliable use [8]. The most robust technological path forward lies in multimodal AI, which assesses candidates holistically across text, audio, and video, making deception significantly more difficult.[9]

But technology alone isn’t enough. The most effective strategy is a defense-in-depth approach—combining technical tools with stronger processes and human-centered verification. This includes multi-stage identity checks, advanced analysis of digital footprints, and assessments specifically designed to be difficult for AI to game.[2]

In essence, the AI resume crisis is a case study in the failure of industrial-age thinking in a digital-age world. The path forward is not to build better automated boxes, but to cultivate a more adaptive, nuanced, and resilient system of talent verification.

Ultimately, navigating this landscape requires a new operational philosophy. This article advocates for the adoption of the "Centaur Model" for recruitment, a human-AI collaborative framework in which technology handles scale and repetitive tasks while human recruiters are augmented and elevated to focus on uniquely human strengths: nuanced assessment, strategic judgment, relationship building, and final authenticity verification. [11] This model is the strategic antidote to the "AI vs. AI" arms race, creating an asymmetric advantage by leveraging human intelligence.

Looking ahead, the future of candidate assessment will shift decisively from evaluating a document (the resume) to verifying a candidate's identity and claimed capabilities in real time. The arms race will continue, demanding agile and adaptive hiring systems. For industry leaders, the strategic imperatives are clear: reframe application fraud as a security risk, invest in a layered defense that combines technology and process, champion the Centaur model, and launch a comprehensive upskilling program for the recruitment workforce to prepare them for this new era.

1. The New Frontline of Recruitment: The AI-Generated Applicant Tsunami

The widespread availability of powerful generative AI and Large Language Models (LLMs) has irrevocably altered the landscape of talent acquisition. What was once a manageable flow of applications has transformed into an overwhelming deluge, fundamentally challenging the established processes, tools, and philosophies of recruitment. This new environment is characterized not only by unprecedented volume but also by a crisis of authenticity, where the lines between genuine candidates, AI-embellished applicants, and entirely fabricated personas have become dangerously blurred. In this section, we delineate the scale and nature of this multifaceted problem, from the quantitative challenge of the "applicant tsunami" to the critical security threat posed by sophisticated, AI-driven fraud.

1.1. The Scale of the Deluge: Quantifying the "Applicant Tsunami"

The most immediate and palpable change for recruiters is the exponential increase in the number of job applications. This is not a gradual trend, but a sudden and massive surge that has been aptly described as an "applicant tsunami."[1] Data from professional networking platform LinkedIn reveals that the number of applications submitted has surged by over 45% in the past year alone, reaching a staggering average of 11,000 applications every minute. [1] This phenomenon is directly fueled by the accessibility of generative AI tools like ChatGPT, which empower job seekers to generate and tailor application materials with unprecedented speed and scale. [1]

The real-world impact of this surge is stark. A single job posting for a remote tech role requiring only three years of experience can now attract an astonishing volume of responses: 400 applications within 12 hours, 600 within a day, and over 1,200 before the listing is hastily taken down.[1] This is the new operational reality for hiring managers. The ability for candidates to automate their job search has reached an industrial scale; one account details a graduate student who used LLMs to submit 1,200 tailored applications within just a few weeks.

This behavior is becoming the norm rather than the exception. A Capterra survey found that 58% of job seekers are now leveraging AI in their search, and those who do can complete 41% more applications than their counterparts who do not use AI. [14] While these tools offer clear benefits to job seekers in terms of efficiency and formatting, they have collectively created an environment of "application oversaturation" for employers, overwhelming recruiters with a mountain of submissions and disrupting the traditional hiring process. [1]

1.2. The Homogenization of the Talent Pool: A Sea of Sameness

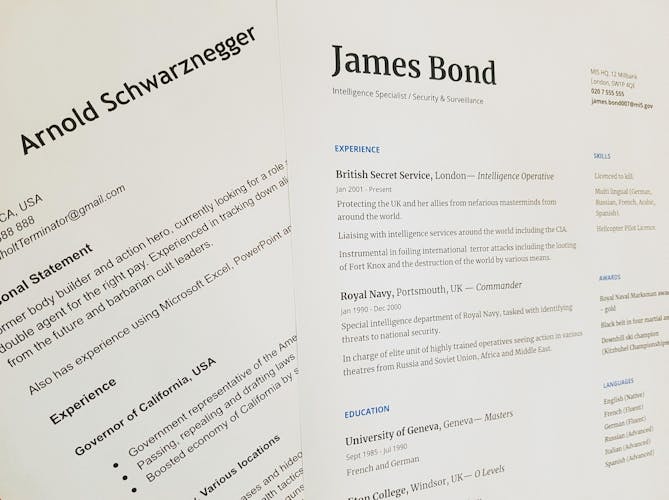

Beyond the sheer volume, a more insidious problem has emerged: the erosion of individuality and authenticity in the applicant pool. The very AI tools that promise to help a candidate "stand out" are, paradoxically, creating a flood of applications that are "suspiciously similar".[13] Recruiters are increasingly confronted with resumes and cover letters that, while polished and grammatically perfect, are filled with generic, impersonal language, an overuse of clichéd buzzwords, and highly repetitive sentence structures. [17] One recruiter on a professional forum bluntly stated that most AI-generated resumes are "garbage," noting that they may "look pretty" but lack the specific, substantive information required to justify an interview or advance a candidate in the process. [20]

This homogenization creates a significant new burden for talent acquisition professionals. Their task is no longer simply to identify the most qualified individuals, but to undertake the far more challenging task of distinguishing between candidates who possess genuine skills and experience and those who are merely adept at "gaming the system." [1] AI tools excel at optimizing documents for Applicant Tracking Systems (ATS) by stuffing them with keywords scraped from the job description. The result is a resume that may pass an initial automated screen but fails to reflect the candidate's actual abilities or tell a compelling, unique story. [4] It forces recruiters to sift through a sea of sameness, a tedious and inefficient process that makes identifying true talent more difficult than ever before.

1.3. Beyond Inauthenticity: The Rise of the Fabricated Candidate

The challenge has escalated far beyond resume embellishment to encompass outright and sophisticated fraud. The threat is no longer confined to inauthentic claims of proficiency but extends to the creation of entirely fabricated candidates. This new frontier of deception leverages a suite of AI technologies, including deepfake tools to alter appearances in video interviews, AI-generated code or creative portfolios to fake skills assessments, and completely synthetic social media profiles to build a veneer of a legitimate digital footprint. [2] A survey by Resume Genius found that 17% of hiring managers have encountered candidates using deepfake technology in video interviews, and 35% have come across AI-created portfolio work. [5]

This is not the work of isolated individuals; it is becoming an industrialized operation. A deeply concerning trend is the involvement of state-sponsored actors. The U.S. Justice Department, for instance, has indicted individuals involved in a scheme to place North Korean nationals in remote IT roles at U.S. companies. [1] These operations employ sophisticated methods, including the use of stolen or AI-generated identities and deepfake technology, to infiltrate corporations with the ultimate goal of funneling funds back to their government's military and weapons programs. [2] This development transforms what was once a human resources issue into a grave matter of corporate and national security. The risk is no longer simply the cost of a bad hire; it is the catastrophic potential of a security breach, data exfiltration, or the installation of malware by a malicious actor who has successfully infiltrated the organization through the hiring process.

The scale of this threat is staggering. The research and advisory firm Gartner has issued a stark warning, projecting that by 2028, a full 25% of all job applicants globally could be fabricated, driven primarily by the power and accessibility of generative AI. [1] This forecast highlights the urgent need for organizations to acknowledge that the nature of the recruitment challenge has fundamentally shifted. The initial annoyance of receiving too many similar resumes has evolved into a systemic security vulnerability that demands a strategic, cross-functional response involving not just HR but also IT, legal, and corporate security leadership. This escalation is fueled by a vicious cycle of automation: candidates, feeling they are facing "cold automation" from employer ATS, turn to AI tools to compete, which in turn forces recruiters to adopt their own AI to manage the volume, creating a resource-draining arms race where both sides continuously escalate their technological efforts. [1]

2. The Ripple Effect: Impact on Recruiter Performance and Credibility

The "applicant tsunami" and the crisis of authenticity are not abstract challenges; they have direct, tangible, and often detrimental consequences for the performance, efficiency, and credibility of professional recruiters and the talent acquisition function as a whole. The sheer scale and deceptive nature of AI-generated applications are straining traditional recruitment models to their breaking point, forcing a re-evaluation of roles, metrics, and the very definition of success in hiring.

2.1. The “Overload Effect”: Drowning in Data, Starving for Insight

The most immediate and debilitating impact on recruiters is the state of "application oversaturation". [4] Faced with hundreds or even thousands of applications for a single role, the task of manual review becomes impossible. This overwhelming volume inevitably slows down the entire recruitment lifecycle, paradoxically increasing the time it takes to find qualified candidates despite the automation involved. [4] The risk of high-quality, authentic candidates being lost in the deluge of AI-generated noise is exceptionally high.

This constant pressure and unmanageable workload lead directly to recruiter burnout, a critical issue that degrades the ability of talent acquisition teams to maintain high standards of candidate selection and engagement. [4] To cope with the volume, recruiters are often forced to rely more heavily on simplistic, automated filters or spend less time per application. This can result in a reliance on superficial keyword matching, which may inadvertently screen out highly qualified candidates whose resumes are not perfectly optimized for the ATS. [14] Consequently, organizations may find themselves settling for candidates who merely meet the minimum requirements on paper, rather than identifying and hiring those who are truly exceptional and could drive significant value. [4]

This dynamic represents a fundamental collapse of the "signal-to-noise ratio" in recruitment. Historically, a recruiter's expertise lies in their ability to detect the "signal" of top talent within the "noise" of unqualified applicants. The AI-driven tsunami has amplified the noise to an unmanageable degree while simultaneously corrupting the signal, as many seemingly strong applications are either inauthentic or entirely fraudulent. [21] As a result, the primary function of the recruiter is undergoing a forced evolution, shifting away from the strategic, high-value work of proactive talent discovery and toward the reactive, operational task of fraud detection and verification. This is a significant, and less strategic, redefinition of the role: it moves the recruiter from talent partner to gatekeeper.

2.2. The Erosion of Trust and Authenticity

The core value proposition of a professional recruiter is their ability to assess human qualities: skill, experience, motivation, and, most importantly, authenticity. The proliferation of AI-generated content strikes at the very heart of this function. A 2023 survey by Resume Genius found that an alarming 76% of hiring managers believe that AI makes it more difficult to assess whether a candidate is being authentic. [5] This growing uncertainty creates a trust deficit that undermines the recruiter's credibility with the hiring managers and business leaders they serve. When a recruiter presents a candidate, there is now an implicit question of whether they are presenting a real person or a well-crafted AI persona.

The stakes are incredibly high. When a fraudulent candidate successfully navigates the screening process and is hired, the consequences can be profound. These range from the direct financial costs of a bad hire to severe legal liabilities, the exposure of sensitive corporate data, and lasting damage to the company's reputation. [2] Each such failure further erodes the credibility of the recruitment team responsible for it. An AI-generated resume was named by 53% of recruiters as one of the biggest red flags indicating an applicant is unsuitable, yet the ability to reliably detect one remains a major challenge. [17] This places recruiters in a difficult position, where they are held accountable for verifying authenticity in an environment designed to obscure it.

2.3. The AI vs. AI Paradox and the Dehumanization of Hiring

In an effort to combat the flood of AI-generated applications, many organizations are deploying their own AI tools for screening and assessment. [13] This has given rise to what industry expert Hung Lee terms an "AI versus AI type of situation," a technological arms race between candidates and employers. [1] Candidates, often frustrated by their perception of interacting with impersonal bots and automated rejection emails from company ATS, feel compelled to use their own AI tools simply to level the playing field. [1] This, in turn, generates more AI content, which necessitates more sophisticated detection tools from employers, creating a self-perpetuating cycle of escalating automation. [1]

This paradox has a corrosive effect on the hiring process, stripping it of its essential human element. Recruiting, at its best, is a "human contact sport." It relies on building relationships, understanding nuanced motivations, and fostering a positive candidate experience. The AI arms race pushes the process in the opposite direction, transforming it into a technical battle of optimization and detection. [27] This not only alienates genuine, highly qualified candidates who may be put off by the impersonal and automated nature of the interaction but also degrades the overall quality of the candidate experience.

This trend also raises questions about the validity of traditional recruitment metrics. For example, a company like Chipotle can boast of reducing its hiring time by 75% through the use of an AI chatbot named "Ava Cado". [1] On a performance dashboard, this appears to be a massive success. However, in an environment where Gartner predicts one in four applicants could be fraudulent, optimizing purely for speed and efficiency becomes a high-risk strategy. [3] A fast, inexpensive hire who turns out to be a security threat represents a catastrophic negative return on investment. This suggests that organizations must urgently re-evaluate their core recruitment KPIs. A myopic focus on metrics like "time-to-fill" or "cost-per-hire" is no longer sufficient and may, in fact, be dangerous. Future-proof recruitment scorecards must incorporate and prioritize new metrics that measure authenticity, the rigor of verification, and the long-term quality of hire, even if it comes at the expense of some of the speed promised by full automation.

3. Countermeasures: A Multi-Pronged Approach to Detection and Verification

The escalating challenge of AI-generated applications and candidate fraud necessitates a sophisticated, multi-layered response. Relying on a single tool or technique is insufficient in the face of an evolving threat landscape. The most effective strategies will combine advanced technological defenses with a fundamental reinforcement of procedural and human-centric vetting processes. Let’s examine the available countermeasures, categorizing them into two critical areas: technological solutions that utilize AI to counter AI, and procedural fortifications that revamp the hiring process for an era of deep uncertainty.

3.1. Technological Defenses: Using AI to Fight AI

As candidates increasingly leverage AI to create application materials, organizations are turning to a new generation of AI-powered tools designed to detect these submissions. These technologies operate on different principles, each with its own strengths and weaknesses.

3.1.1. Linguistic and Stylometric Analysis: The AI's Fingerprint

This is currently the most mature and widely deployed method for detecting AI-generated text. The core principle involves training machine learning models on vast datasets containing both human-written and AI-generated content. By analyzing these datasets, the models learn to recognize the subtle statistical "fingerprints" that differentiate machine text from human writing. [28] The need for such tools is acute; research shows that unaided humans can distinguish between AI-generated text and human writing with only about 53% accuracy, which is barely better than random chance. [31]

These detection models analyze text across several key dimensions:

● Perplexity: This metric measures the predictability of a text. LLMs are trained to predict the next most probable word in a sequence. As a result, the text they generate is often highly predictable and thus has a low perplexity score. Human writing, in contrast, is filled with more varied and less predictable word choices, resulting in a high perplexity score. A low perplexity score is a strong indicator of AI authorship. [32]

● Burstiness: This refers to the variation in sentence length and structure. Human writing naturally has a varied rhythm, with a mix of long, complex sentences and short, punchy ones. This results in high burstiness. AI-generated text, on the other hand, tends to be more uniform in its sentence construction, resulting in lower burstiness. [30]
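To make the two metrics concrete, here is a minimal sketch in Python. It is not how any commercial detector works: real tools take token probabilities from a large language model, whereas this example substitutes a toy unigram model fitted on a reference text. The function names, the crude tokenizer, and the choice of coefficient of variation as the burstiness measure are all assumptions made for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase word tokens; a deliberately crude tokenizer."""
    return re.findall(r"[a-z']+", text.lower())


def split_sentences(text: str) -> list[str]:
    """Split on sentence-ending punctuation followed by whitespace."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under an add-one-smoothed unigram model
    fitted on `reference` (a stand-in for real LLM probabilities)."""
    ref_counts = Counter(tokenize(reference))
    tokens = tokenize(text)
    if not tokens:
        raise ValueError("text contains no tokens")
    vocab_size = len(ref_counts) + 1  # +1 bucket for unseen words
    total = sum(ref_counts.values())
    log_prob = sum(
        math.log((ref_counts[tok] + 1) / (total + vocab_size)) for tok in tokens
    )
    # Perplexity is the exponential of the average negative log-probability.
    return math.exp(-log_prob / len(tokens))


def burstiness(text: str) -> float:
    """Coefficient of variation (std / mean) of sentence lengths in words."""
    lengths = [len(tokenize(s)) for s in split_sentences(text)]
    lengths = [n for n in lengths if n > 0]
    if len(lengths) < 2:
        return 0.0
    mean = sum(lengths) / len(lengths)
    variance = sum((n - mean) ** 2 for n in lengths) / len(lengths)
    return math.sqrt(variance) / mean
```

A text whose sentences are all the same length scores a burstiness of zero, and a text made of words the reference model has seen often scores a lower perplexity than one full of unseen words. Neither number is meaningful in isolation; a detector would compare them against thresholds learned from labeled data.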

While early detectors relied on these basic metrics, more advanced models have significantly improved accuracy. Transformer-based architectures, such as BERT (Bidirectional Encoder Representations from Transformers), can achieve accuracy rates of 93-99% in controlled tests. Unlike older models, BERT analyzes text bidirectionally, enabling it to understand the context of words and phrases, rather than just matching keywords, making it significantly more powerful. [32]

Even more specialized models are emerging. StyloAI, for example, is a model that uses a comprehensive suite of 31 specific stylometric features to create a highly detailed "fingerprint" of a text's writing style. These features include lexical measures (e.g., Type-Token Ratio, Hapax Legomenon Rate), syntactic measures (e.g., Complex Verb Count, Average Sentence Length), and sentiment measures. By analyzing this rich feature set, StyloAI has demonstrated up to 98% accuracy on specific datasets. [39]
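Three of the feature types named above are simple enough to compute by hand. The sketch below is an illustration only and does not reproduce StyloAI's implementation or its exact feature definitions. In particular, the hapax legomenon rate is assumed here to be the number of words that occur exactly once divided by the total word count; other definitions divide by the number of distinct words instead.

```python
import re
from collections import Counter


def stylometric_features(text: str) -> dict[str, float]:
    """Compute three illustrative stylometric features for a text."""
    words = re.findall(r"[a-z']+", text.lower())
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    if not words or not sentences:
        raise ValueError("text must contain at least one word")
    counts = Counter(words)
    # Hapax legomena are words that appear exactly once in the text.
    hapaxes = sum(1 for c in counts.values() if c == 1)
    return {
        "type_token_ratio": len(counts) / len(words),
        "hapax_legomenon_rate": hapaxes / len(words),
        "avg_sentence_length": len(words) / len(sentences),
    }
```

In a full system, a vector of such features would be fed to a trained classifier rather than inspected individually.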

Despite these advances, linguistic analysis is not a panacea. The primary weakness of these models is their vulnerability to "adversarial attacks." A candidate can use an AI to generate a resume and then make minor manual edits or run it through a paraphrasing tool. These simple revisions can often be enough to alter the statistical properties of the text and fool the detector. [6] Furthermore, these models can exhibit bias, particularly against non-native English speakers, whose natural writing patterns might be misclassified as AI-generated, leading to false positives and unfair outcomes. [7] This creates a perpetual "cat and mouse" game, where detection models must constantly be retrained to keep up with the evolving capabilities of generative models and the methods used to evade detection. [31]

3.1.2. Digital Watermarking: The Hidden Signature

A more proactive approach to detection is digital watermarking. The goal is to embed a hidden, statistically significant signature into LLM-generated text at the moment of its creation. [8] This technique does not alter the meaning of the text and is invisible to the human eye. The most common method, proposed by researchers at the University of Maryland, involves partitioning the LLM's vocabulary into a "green list" and a "red list" of tokens (words or parts of words) based on a secret key. [8] During text generation, the model subtly biases its output to favor tokens from the green list, causing them to appear slightly more frequently than they would by chance. A detection algorithm can then analyze a piece of text and, using the same secret key, check for this unnaturally high frequency of green-listed words. A high z-score, which measures this statistical deviation, serves as a strong signal of AI authorship. [8]
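The detection side of this scheme can be sketched in a few lines of Python. This is a simplified illustration, not the published implementation: it works on word strings instead of model token IDs, uses SHA-256 to assign each token to the green list based on the secret key and the preceding token, and assumes the green list covers half the vocabulary (`GAMMA = 0.5`). All names here are hypothetical.

```python
import hashlib
import math

GAMMA = 0.5  # assumed fraction of the vocabulary placed on the green list


def is_green(prev_token: str, token: str, secret_key: str) -> bool:
    """Pseudo-randomly assign `token` to the green list, seeded by the
    secret key and the preceding token."""
    digest = hashlib.sha256(f"{secret_key}|{prev_token}|{token}".encode()).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    return int.from_bytes(digest[:8], "big") / 2**64 < GAMMA


def watermark_z_score(tokens: list[str], secret_key: str) -> float:
    """z-score of the observed green-token count against the count
    expected from unwatermarked text."""
    scored = len(tokens) - 1  # the first token has no predecessor
    if scored < 1:
        raise ValueError("need at least two tokens")
    green = sum(
        is_green(prev, tok, secret_key) for prev, tok in zip(tokens, tokens[1:])
    )
    expected = GAMMA * scored
    std_dev = math.sqrt(scored * GAMMA * (1 - GAMMA))
    return (green - expected) / std_dev
```

Unwatermarked text should land on the green list about half the time under these assumptions, giving a z-score near zero. Text generated with a strong green-list bias pushes the score well above any plausible chance value, and a detector would flag text whose score exceeds a chosen threshold.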

This technology has garnered significant attention, with calls for its implementation included in governmental directives, such as President Biden's Executive Order on AI. [42] However, in its current state, text watermarking is a fragile solution with significant vulnerabilities. [43] The most critical weakness is paraphrasing. If a user takes a watermarked piece of text and runs it through a different LLM or even a simple paraphrasing tool, the original token distribution is destroyed and the watermark is effectively erased. Studies have shown that this can cause detection accuracy to plummet from over 99% to below 5%. [8] Other challenges include the risk of "spoofing" attacks, where a malicious actor could learn to mimic the statistical patterns of a watermark, and the fundamental fact that text is inherently malleable and easy to edit, making it far more difficult to embed a truly permanent and immutable signature compared to images or audio files. [42]

3.1.3. Multimodal Behavioral Analysis: Beyond the Text

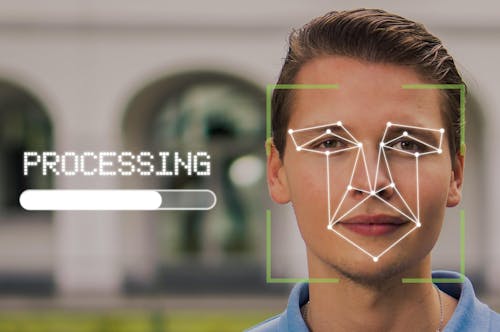

The next frontier in AI-driven detection moves beyond analyzing static text to assessing candidates holistically and dynamically. Multimodal AI systems are designed to process and correlate information from multiple data streams—or modalities—simultaneously. In a recruitment context, this means analyzing a candidate's video interview by integrating their spoken words (text), their vocal patterns (audio, including tone, pitch, and cadence), and their non-verbal cues (video, including facial expressions, posture, and eye movements). [9]

This approach offers a far more robust defense against fraud. It is significantly more difficult for a candidate to fake consistency across multiple modalities than it is to generate a polished resume. These systems can perform "cross-modal validation," flagging inconsistencies that may signal deception, such as a mismatch between the confident language a candidate uses and nervous nonverbal behaviors. [9] Multimodal analysis is also a powerful tool for detecting deepfakes. AI can be trained to identify the subtle visual artifacts often present in manipulated videos, such as unnatural blinking patterns, unusual blurring at the edges of the face, or audio that is not perfectly synchronized with lip movements. [46] By creating a richer, more comprehensive picture of the candidate, multimodal AI represents a significant leap forward in the ability to verify authenticity.

3.2. Procedural Fortification: Rebuilding the Vetting Process

Technological solutions, while powerful, are only one part of the equation. Their inherent limitations necessitate a fundamental redesign of the recruitment process itself. Organizations must move toward a model of continuous verification, building a layered defense that is more resilient to fraud.

3.2.1. The Multi-Stage Verification Funnel: A Layered Defense

The traditional model of conducting a single background check at the end of the hiring process is no longer adequate. In an environment rife with fraud, organizations must adopt a continuous, multi-stage verification funnel that integrates checkpoints throughout the entire candidate journey. [10] This "defense-in-depth" strategy creates multiple opportunities to catch inconsistencies and fraudulent activity. A robust funnel would include:

● Application Stage: Initial, low-friction, passive verification of core data points like email, phone number, and address risk scores. [48]

● Screening Stage: Conducting an active cross-check of a candidate's claimed history against their public digital footprint, including LinkedIn profiles and other professional sites, to identify inconsistencies. [2]

● Interview Stage: Live identity verification and the use of behavioral questioning designed to probe for authenticity. [49]

● Offer & Onboarding Stage: Final, rigorous checks, including potential in-person document verification and strict IT onboarding protocols for remote hires. [2]

This layered approach ensures that even if a fraudulent application passes one checkpoint, it is likely to be caught at a subsequent, more rigorous stage.
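The arithmetic behind layering is worth making explicit. The sketch below assumes, purely for illustration, that each stage catches fraud independently and with the made-up catch rates shown; real stages are unlikely to be fully independent, and no such rates appear in the sources cited here.

```python
import math


def pass_through_probability(catch_rates: list[float]) -> float:
    """Probability that a fraudulent application slips past every stage,
    assuming stages catch fraud independently."""
    if any(not 0.0 <= r <= 1.0 for r in catch_rates):
        raise ValueError("catch rates must lie in [0, 1]")
    # A fraud survives a stage with probability (1 - catch rate).
    return math.prod(1.0 - r for r in catch_rates)


# Hypothetical per-stage catch rates, chosen only for illustration.
stages = {
    "application": 0.30,
    "screening": 0.40,
    "interview": 0.50,
    "offer_onboarding": 0.60,
}
escaped = pass_through_probability(list(stages.values()))
print(f"Fraud passing all four stages: {escaped:.1%}")
```

With these assumed rates, no single stage catches more than 60% of fraudulent applications, yet only about 8% would survive all four. That is the practical case for several modest checkpoints over one final check.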

3.2.2. Advanced Identity and Credential Verification: Proving You Are You

The assumption that the identity provided on an application is real can no longer be taken for granted. [48] Modern verification must go beyond traditional background checks to actively prove identity. Best practices now include a combination of methods:

● Biometric Identity Verification: Partnering with specialized services like Socure or CLEAR, which are becoming integrated into hiring platforms like Greenhouse. [46] These services typically require a candidate to upload a photo of a government-issued ID and then take a live selfie. The system utilizes facial recognition to match the two and liveness detection to verify that the selfie is from a live person, not a photo or a deepfake video. [51]

● Digital Footprint Scrutiny: Training recruiters to conduct a thorough analysis of a candidate's online presence. Key red flags include LinkedIn profiles that were created recently, have few connections, show no activity, or contain details that are inconsistent with the resume. [2] A reverse image search on a profile picture can also reveal if it is a stock photo or has been stolen from another source. [10]

● Device and Network Intelligence: During remote interviews or assessments, capturing and analyzing device metadata can provide crucial clues. A mismatch between a candidate's stated location and the geolocation of their IP address, or the use of a VPN to mask their location, are significant red flags that warrant further investigation. [49]
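The footprint and device checks above lend themselves to a simple rule-based screen that surfaces candidates for human follow-up. The sketch below is hypothetical throughout: the field names, the 180-day and 50-connection thresholds, and the assumption that IP geolocation and VPN detection arrive as precomputed inputs are all illustrative choices, not values from any vendor or study.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class CandidateSignals:
    profile_created: date  # when the LinkedIn profile was created
    connections: int       # number of connections on the profile
    recent_posts: int      # visible posts or activity items
    stated_country: str    # country code given on the application
    ip_country: str        # country resolved from the interview IP
    vpn_detected: bool     # result of an upstream VPN check


def red_flags(c: CandidateSignals, today: date) -> list[str]:
    """Return human-readable red flags; thresholds are illustrative."""
    flags = []
    if (today - c.profile_created).days < 180:
        flags.append("profile created recently")
    if c.connections < 50:
        flags.append("few connections")
    if c.recent_posts == 0:
        flags.append("no visible activity")
    if c.stated_country.upper() != c.ip_country.upper():
        flags.append("stated location does not match IP geolocation")
    if c.vpn_detected:
        flags.append("VPN in use")
    return flags
```

The function returns reasons instead of a pass/fail verdict on purpose. Many legitimate candidates use VPNs or have sparse profiles, so each flag is a prompt for a recruiter to investigate, not grounds for automatic rejection.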

3.2.3. The Un-fakable Assessment: Designing for Authenticity

Perhaps the most powerful procedural defense is to design assessments that are inherently difficult for an AI to complete or for a human to cheat on using AI assistance. The focus should shift from questions that test rote knowledge to tasks that require genuine, real-time cognitive skill. This includes:

● Live, Interactive Skills Assessments: Instead of take-home assignments, which an AI or another person can complete, assessments should be conducted live and proctored. For technical roles, this could be a live coding challenge or a system design problem where the candidate must not only produce a solution but also articulate their thought process and defend their choices in real-time to an interviewer. [53]

● AI-Aware Behavioral Interviewing: Interview questions must be designed to thwart scripted, AI-generated answers. This means moving away from standard questions and toward open-ended, multi-part situational problems that require contextual reasoning, creativity, and spontaneous thinking. [10] Effective techniques include asking candidates to "teach" a complex concept they claim to know or to choose between two viable options and defend their reasoning. These types of questions distinguish genuine understanding from memorized responses. [10]

● Physical Interaction Probes: A simple yet effective tactic during video interviews is to ask the candidate to perform a physical action that can disrupt many deepfake or video filter technologies. Asking a candidate to wave their hand in front of their face briefly, turn their head from side to side, or even stand up can cause the AI overlay to glitch or reveal artifacts, exposing the deception. [2]

Ultimately, no single technology can serve as a "magic bullet." The vulnerabilities inherent in linguistic analysis and watermarking demonstrate that a purely technological solution is a flawed strategy. Procedural fortifications are not merely supplementary; they are a necessary component of a resilient hiring system. The most effective approach is a "defense-in-depth" strategy where each layer—technological screening, human review, skills assessment, and identity verification—is designed to compensate for the weaknesses of the others and catch failures from the preceding stage. This integrated approach is the only viable path forward. Furthermore, companies must address the policy vacuum surrounding what constitutes "acceptable AI use." Without clear, publicly communicated guidelines on what is permissible (e.g., grammar correction) versus what is fraudulent (e.g., using AI for interview answers), organizations lack a firm basis for disqualifying candidates and open themselves to claims of unfairness. [15]
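The layered structure described above can be sketched as a short pipeline. This is an illustrative sketch under stated assumptions: the four layer functions, the dictionary keys they read (`ai_text_score`, `reviewer_concerns`, `live_assessment_passed`, `identity_verified`), and the 0.8 cut-off are all hypothetical placeholders for whatever tools and checkpoints an organization actually runs.

```python
from typing import Callable, Dict, List, Sequence, Tuple

# A layer inspects an application record and returns (passed, note).
Layer = Callable[[Dict], Tuple[bool, str]]


def technological_screening(app: Dict) -> Tuple[bool, str]:
    score = app.get("ai_text_score", 0.0)  # hypothetical detector output in [0, 1]
    return (score < 0.8), f"detector score {score:.2f}"


def human_review(app: Dict) -> Tuple[bool, str]:
    concerns = app.get("reviewer_concerns", [])
    return (not concerns), ("; ".join(concerns) if concerns else "no concerns")


def skills_assessment(app: Dict) -> Tuple[bool, str]:
    passed = bool(app.get("live_assessment_passed", False))
    return passed, ("passed live assessment" if passed else "did not pass live assessment")


def identity_verification(app: Dict) -> Tuple[bool, str]:
    verified = bool(app.get("identity_verified", False))
    return verified, ("identity verified" if verified else "identity not verified")


LAYERS: Sequence[Tuple[str, Layer]] = (
    ("technological screening", technological_screening),
    ("human review", human_review),
    ("skills assessment", skills_assessment),
    ("identity verification", identity_verification),
)


def run_defense_in_depth(app: Dict, layers: Sequence[Tuple[str, Layer]] = LAYERS):
    """Run each layer in order; stop at the first failure and keep an audit trail."""
    notes: List[str] = []
    for name, check in layers:
        passed, note = check(app)
        notes.append(f"{name}: {note}")
        if not passed:
            return False, notes
    return True, notes


if __name__ == "__main__":
    # An application that slips past the first three layers is still caught by the fourth.
    application = {
        "ai_text_score": 0.35,
        "reviewer_concerns": [],
        "live_assessment_passed": True,
        "identity_verified": False,
    }
    cleared, trail = run_defense_in_depth(application)
    print("cleared:", cleared)
    for line in trail:
        print(" -", line)
```

The example application deliberately passes the detector, the reviewer, and the live assessment, which illustrates the point of layering: a miss at one stage is not final as long as a later stage tests something different.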

4. The Centaur Recruiter: Augmenting Human Expertise in the AI Era

The rise of AI in recruitment presents a fundamental choice for organizations: to pursue full automation, relegating humans to a secondary role, or to forge a new model of human-AI collaboration. The challenges of fraud, inauthenticity, and the sheer volume of data make a purely automated approach untenable and risky. The strategic path forward lies not in replacing human recruiters but in augmenting their capabilities. By adapting the "Centaur" concept from military strategy and game theory, talent acquisition leaders can design a superior operating model that leverages the best of both human and machine intelligence to navigate the complexities of the modern hiring landscape.

4.1. Introducing the Centaur Model for Talent Acquisition

The concept of "Centaur warfighting," as articulated by security expert Paul Scharre, describes a hybrid intelligence system in which a human and a machine work in close partnership, much like the mythical creature that combines the torso of a human with the body of a horse. [11] In this model, each partner contributes its unique strengths—the machine provides speed, power, and data-processing capacity, while the human provides strategy, judgment, and ethical oversight. This collaboration yields performance that is superior to what either agent could achieve independently. [11]

Applying this framework to talent acquisition, we can define the "Centaur Recruiter" as a human expert who wields AI as a powerful tool. The AI handles the immense scale of data processing and automates repetitive tasks. At the same time, the human recruiter retains strategic control, exercises critical judgment, and manages the uniquely human aspects of the hiring process.

This stands in stark contrast to the "Minotaur" model, where the human is effectively subordinated to the machine, reduced to being "the hands of the machine" and merely executing tasks dictated by an algorithm. [11] A Minotaur recruiter, for example, would be one who blindly accepts the ranked list of candidates produced by an AI screening tool without critical review or independent verification. In the high-stakes, nuanced, and legally sensitive domain of hiring, where assessing authenticity and potential is paramount, the simplistic Minotaur approach is not only suboptimal but also dangerously prone to error, bias, and costly failures. The requirement for a Centaur model, where human control and judgment are central, is therefore unavoidable.

4.2. Delineating Roles: Where AI Excels and Where Humans are Irreplaceable

A successful Centaur model requires a clear and deliberate allocation of tasks based on the distinct capabilities of both the AI and the human recruiter. This division of labor is not about minimizing human involvement but about focusing it on the highest-value activities where human intelligence is indispensable.

AI's Role (The Horse - Speed, Power, and Scale):

● High-Volume Sourcing and Screening: The AI's primary role is to tackle the "applicant tsunami." It can parse and analyze millions of data points from resumes, cover letters, and online profiles at a scale and speed no human team could ever match. [27] This includes performing initial keyword and skills matching to generate a first-pass shortlist of potentially relevant candidates. [56]

● Automation of Repetitive Tasks: AI is ideally suited to handle the administrative burdens of recruitment, such as scheduling interviews, sending automated follow-up communications, and performing initial data entry into the ATS. This frees up significant recruiter time. [14]

● Initial Fraud and Authenticity Flagging: AI detection tools can serve as the first line of defense, running linguistic and stylometric analyses on all incoming applications to flag resumes with a high probability of being AI-generated or containing inconsistencies. These flagged applications are then escalated for mandatory human review. [21]
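The flag-and-escalate step in the last bullet can be sketched as a routing rule. This is a minimal sketch under stated assumptions: `ai_probability` stands for the output of whatever detection tool is in use, `inconsistencies` for a count of mismatches it reports, and the 0.7 threshold is an arbitrary illustrative value, not a recommendation.

```python
from enum import Enum


class Route(Enum):
    STANDARD_QUEUE = "standard queue"
    HUMAN_REVIEW = "mandatory human review"


def route_application(ai_probability: float,
                      inconsistencies: int = 0,
                      threshold: float = 0.7) -> Route:
    """Decide where an application goes next.

    There is deliberately no auto-reject route: the detector only flags,
    and a flagged application is always escalated to a person.
    """
    if not 0.0 <= ai_probability <= 1.0:
        raise ValueError("ai_probability must be between 0 and 1")
    if ai_probability >= threshold or inconsistencies > 0:
        return Route.HUMAN_REVIEW
    return Route.STANDARD_QUEUE


if __name__ == "__main__":
    print(route_application(0.91).value)
    print(route_application(0.20, inconsistencies=2).value)
    print(route_application(0.20).value)
```

Keeping rejection out of the automated path is the design choice that separates this from the "Minotaur" pattern discussed earlier: the machine narrows attention, and the recruiter makes the call.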

Human's Role (The Rider - Strategy, Judgment, and Connection):

● Nuanced Assessment and Contextual Judgment: While AI can match skills listed on a page, it cannot assess the critical "intangibles" that determine long-term success. The human recruiter is irreplaceable for evaluating cultural fit, personality, communication style, emotional intelligence, and a candidate's potential for growth. [12]

● Authenticity Verification and Investigation: The human recruiter serves as the final arbiter of authenticity. This involves conducting probing, AI-aware behavioral interviews designed to get past scripted answers, scrutinizing a candidate's digital footprint, and making the final judgment call when technological signals are ambiguous or contradictory. [53]

● Strategic Decision-Making and Ethical Oversight: The human must interpret the data provided by AI systems, identify and correct for potential algorithmic biases, and have the authority to override flawed recommendations. The final, context-aware hiring decision must always rest with a human. [58]

● Relationship Building and Candidate Experience: Recruiting remains a fundamentally human endeavor. A human recruiter builds trust and rapport with candidates, acts as a career coach and guide through the hiring process, and provides a personalized experience. This human connection is crucial for attracting top talent, maintaining a positive employer brand, and enhancing offer acceptance rates. [12]

4.3. Achieving Superior Performance through Hybrid Intelligence

The Centaur model is not a compromise between automation and human touch; it is a strategically superior operating model that creates a whole greater than the sum of its parts. This hybrid intelligence framework directly addresses the core challenges of the current recruitment environment. By delegating the overwhelming volume of low-level screening to AI, the model frees up the limited time and cognitive energy of human recruiters, allowing them to focus exclusively on high-value tasks such as quality assessment, authenticity verification, and strategic engagement. [4]

This model also creates a system of checks and balances that mitigates the weaknesses inherent in each component. The known vulnerabilities of AI—its lack of nuance, its potential for bias, and its inability to understand deep context—are checked and corrected by human oversight and final judgment. [58] Conversely, the limitations of humans—their cognitive biases, susceptibility to fatigue when faced with repetitive tasks, and inability to process data at scale—are supported and augmented by the AI's speed, consistency, and data-processing power. This collaborative approach breaks the symmetry of the "AI vs. AI arms race." Instead of simply fighting AI with more AI (the Minotaur approach), the Centaur model introduces a more powerful, asymmetric advantage: the combination of machine scale and human strategic intelligence. It effectively changes the game from "my bot versus your bot" to "my human-machine team versus your bot."

4.4. Training the Modern Centaur: Developing New Competencies

Implementing a successful Centaur model is not merely a matter of purchasing new software; it requires a fundamental upskilling and reskilling of the recruitment workforce. The role of the recruiter is evolving from a sourcer and interviewer to a sophisticated operator of a human-machine system. This necessitates the development of a new set of core competencies:

● Data Literacy: Recruiters must be trained to understand, interpret, and critically question the outputs of AI systems. They need to understand what the data means, how it was generated, and its limitations, rather than blindly accepting algorithmic recommendations. [58]

● AI Systems Thinking: Recruiters need a functional, practical understanding of how the different AI tools in their stack (such as detection models, resume parsers, chatbots, and scheduling tools) actually work. This includes knowing each tool's specific strengths, weaknesses, and potential failure points. [59]

● Ethical and Legal Acumen: With regulations such as the EU AI Act classifying AI systems used in recruitment and employment decisions as "high-risk," recruiters require a solid understanding of the ethical implications and legal risks associated with using AI in the hiring process. This includes understanding how to identify and mitigate bias to ensure compliance with anti-discrimination laws. [1]

● Advanced Investigative and Verification Skills: As the final line of defense against sophisticated fraud, recruiters must become proficient in new investigative techniques, including digital footprint analysis, device intelligence interpretation, and the art of conducting AI-aware behavioral interviews that can penetrate digital facades. [2]

The shift to a Centaur model requires a corresponding shift in organizational investment. The focus can no longer be solely on procuring tools. The larger and more critical investment must be in the talent and training required to create a new generation of Centaur Recruiters. The ultimate ROI comes not from the technology alone, but from the enhanced capability and superior performance of the integrated human-machine unit.

5. The Road Ahead: Future Directions in Candidate Verification and Assessment

The collision of generative AI and talent acquisition is not a momentary disruption but the beginning of a new, enduring reality. The "arms race" between AI-driven application generation and detection will continue to escalate, demanding that organizations build agile, resilient, and forward-looking hiring systems. The future of candidate assessment will be defined by a fundamental shift away from static, text-based documents and toward dynamic, multi-faceted verification of identity and capability. This section synthesizes the findings of our analysis to provide a view of the road ahead, highlighting emerging technologies and offering strategic recommendations for industry leaders.

5.1. The Next Generation of Detection and Verification

As the current generation of detection tools faces challenges in terms of robustness and evasion, the market is already moving toward more sophisticated and integrated solutions. Three key trends will shape the future of candidate verification:

● The Ascendance of Multimodal AI: The future of reliable candidate assessment will inevitably move beyond the resume. The adoption of multimodal AI platforms will become more widespread, as they offer a far more holistic and difficult-to-fake method of evaluation. By combining and analyzing data from text (such as resumes and written answers), video (including facial expressions and non-verbal cues), and audio (including vocal tone and sentiment) simultaneously, these systems can create a comprehensive candidate profile. [9] This approach fundamentally raises the bar for deception, as a fraudulent candidate must maintain consistency not just in their words, but across all behavioral modalities.

● Instruction-Tuned Embeddings for Advanced Skills Verification: The field of Natural Language Processing (NLP) is rapidly advancing beyond simple keyword matching. A cutting-edge technique known as instruction-tuned embeddings holds significant promise for verifying more nuanced skills. Models like INSTRUCTOR can be prompted to generate text embeddings that are specifically tailored to a given instruction, such as "Represent this project description to highlight evidence of senior-level software architecture skills". [61] This enables a more context-aware analysis of a candidate's experience, which is far more sophisticated than searching for keywords like "Java" or "Python." This could lead to a new generation of assessment tools that are more accurate and significantly harder to spoof with generic, AI-generated content. [63]

● The Rise of Integrated Identity-First Platforms: The market will see a convergence of previously separate systems. Standalone AI detection tools will increasingly become embedded features within larger platforms. We are already seeing this with hiring platforms like Greenhouse partnering with identity verification firms like CLEAR to create integrated solutions such as "Greenhouse Real Talent". [46] The goal of these platforms is to link every application to a verified, real-world identity from the very beginning of the process. This represents a paradigm shift: the "source of truth" moves from the application document to a live, continuously validated digital identity. This market evolution suggests that AI detection and identity verification will cease to be optional add-ons. They will instead become standard, non-negotiable features of modern Applicant Tracking Systems (ATS) and Human Capital Management (HCM) suites.
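To make the instruction-tuned embedding trend above more concrete, here is a minimal sketch of ranking project descriptions against a reference description under a shared instruction. Several things here are assumptions rather than anything from the cited sources: `rank_by_evidence` and `toy_embed` are hypothetical names, and `toy_embed` is a deliberately crude stand-in that counts a few fixed terms and ignores the instruction entirely, so that the example runs without downloading a model. To our understanding of the INSTRUCTOR project's README, a real model is loaded with `INSTRUCTOR('hkunlp/instructor-large')` from the `InstructorEmbedding` package, and its `encode` method accepts the same list of `[instruction, text]` pairs, so it could be passed in place of `toy_embed`.

```python
import math
from typing import Callable, List, Sequence, Tuple

# An embedder maps [instruction, text] pairs to one vector per pair.
Embedder = Callable[[List[List[str]]], Sequence[Sequence[float]]]

INSTRUCTION = ("Represent this project description to highlight evidence of "
               "senior-level software architecture skills")

# Fixed terms used only by the stand-in embedder below.
_TOY_TERMS = ["architecture", "distributed", "design", "service",
              "documentation", "typo"]


def toy_embed(pairs: List[List[str]]) -> List[List[float]]:
    """Stand-in embedder: term counts only. It IGNORES the instruction."""
    return [[float(text.lower().count(term)) for term in _TOY_TERMS]
            for _instruction, text in pairs]


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def rank_by_evidence(embed: Embedder, reference: str,
                     descriptions: List[str]) -> List[Tuple[float, str]]:
    """Score each description by similarity to the reference, highest first."""
    pairs = [[INSTRUCTION, reference]] + [[INSTRUCTION, d] for d in descriptions]
    vectors = embed(pairs)
    ref_vector = vectors[0]
    scored = [(cosine(ref_vector, vec), desc)
              for vec, desc in zip(vectors[1:], descriptions)]
    return sorted(scored, key=lambda item: item[0], reverse=True)


if __name__ == "__main__":
    reference = "Designed a distributed architecture with clear service boundaries"
    candidates = [
        "Fixed typos in the project documentation",
        "Led the architecture redesign of our distributed services",
    ]
    for score, text in rank_by_evidence(toy_embed, reference, candidates):
        print(f"{score:.2f}  {text}")
```

With a real instruction-tuned model, changing `INSTRUCTION` changes the embedding space itself, which is what distinguishes the technique from the keyword counting that the stand-in performs.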

5.2. The Evolving Arms Race and the Imperative for Agility

The "cat and mouse" game between AI generation and AI detection is not a temporary state; it is a permanent feature of the new landscape. [31] As detection models become more sophisticated, they will be met by a new generation of AI-powered obfuscation tools. Services are already emerging that are explicitly designed to "humanize" AI-generated text by introducing variations in perplexity and burstiness to evade detection. [35] Similarly, deepfake technology will continue to improve, becoming increasingly realistic and more challenging to detect. [22]
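As a rough illustration of one signal these "humanizer" services manipulate, the sketch below computes a simple burstiness proxy. "Burstiness" has no single standard definition; here we assume one common simplification, the coefficient of variation of sentence length in words. Perplexity is left out because it requires scoring the text with a language model. The function names are hypothetical.

```python
import re
import statistics
from typing import List


def sentence_lengths(text: str) -> List[int]:
    """Split on sentence-ending punctuation and count words per sentence."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    return [len(s.split()) for s in sentences]


def burstiness(text: str) -> float:
    """Coefficient of variation of sentence length (0.0 means perfectly uniform)."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0
    mean = statistics.mean(lengths)
    return statistics.pstdev(lengths) / mean if mean else 0.0


if __name__ == "__main__":
    uniform = "The cat sat down. The dog ran off. The bird flew up."
    varied = ("Stop. After three years of leading the migration, I finally "
              "shut the old cluster down. It worked.")
    print(f"uniform: {burstiness(uniform):.2f}")
    print(f"varied:  {burstiness(varied):.2f}")
```

Because the statistic depends only on surface features, a tool that inserts a few very short and very long sentences can raise it at will, which is why a detector leaning on this signal alone is easy to evade.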

This dynamic reality means that any static solution or one-time technology purchase is doomed to fail. The core strategic imperative for organizations is not to find a single perfect tool, but to build an agile and resilient hiring system. This system must be designed for continuous adaptation, integrating technology, processes, and people in a way that allows for rapid updates in response to new threats. [47] This requires ongoing investment in technology, continuous training for recruiters, and a culture of vigilance and constant process improvement.

5.3. Summary and Strategic Recommendations

The proliferation of generative AI has transformed a long-standing recruitment challenge—high application volume—into a critical corporate security threat. The rise of the AI-generated and fully fabricated candidate has rendered traditional, document-centric hiring processes obsolete and dangerously vulnerable. Standalone technological solutions, while improving, are not a panacea, as they are locked in a perpetual and escalating arms race with generative technologies.

The most effective and sustainable path forward is a strategic re-imagining of the recruitment function, built on a layered, "defense-in-depth" strategy and centered on the "Centaur Recruiter" model of human-AI collaboration. To navigate this new terrain successfully, HR and business leaders should prioritize the following actionable recommendations:

1. Reframe the problem as a security risk: Immediately cease viewing application fraud as a minor HR issue. Reclassify it as a significant corporate security risk and establish a cross-functional task force that includes leadership from Talent Acquisition, IT, Cybersecurity, and Legal to develop a unified organizational strategy.

2. Invest in a layered, "defense-in-depth" framework: Reject the search for a single "magic bullet" technology. Instead, invest in and implement a multi-layered defense that combines technology with process. This should include a suite of tools for linguistic analysis and identity verification, but they must be integrated into a redesigned, multi-stage procedural funnel that includes rigorous human checkpoints at every stage.

3. Champion the Centaur Model: Adopt the Centaur model formally as the guiding philosophy for the talent acquisition function. Explicitly reject the "Minotaur" approach of full automation for high-stakes hiring decisions. Redesign recruitment workflows to augment, not replace, human recruiters, ensuring that human capital is focused on the highest-value, uniquely human tasks of strategic assessment, relationship building, and final verification.

4. Launch a "Centaur Recruiter" upskilling initiative: The single most critical investment is not in software, but in people. Immediately design and launch a comprehensive training and development program for the entire recruitment team. This curriculum must build new core competencies in data literacy, AI systems thinking, ethical and legal compliance, and advanced investigative techniques for fraud detection.

5. Establish and enforce clear AI use policies: Address the current policy vacuum by developing and clearly communicating a public-facing policy on the acceptable use of AI by candidates in the application process. This removes ambiguity, sets clear expectations, and creates a fair and defensible basis for disqualifying candidates who engage in fraudulent or deceptive practices.

The strategic exit from this self-perpetuating arms race isn't a more sophisticated bot-trap; it's a fundamental change in the game itself. The ultimate defense against the machine isn't a better machine; it's a more discerning, augmented human. Instead of trying to out-build the bots, the winning move is to leverage them for scale and then sidestep them with the one thing they can't replicate: genuine human judgment. After all, the goal was never to catch a robot pretending to be a project manager; it's to find a human who can actually manage the project, a nuanced task that, for now, remains stubbornly outside the grasp of any large language model.

References

1. Automated resumes flood US job market as AI drives 11,000 applications per minute, accessed July 1, 2025, https://timesofindia.indiatimes.com/education/news/automated-resumes-flood-us-job-market-as-ai-drives-11000-applications-per-minute/articleshow/122112552.cms

2. AI and the Rise of Resume Fraud - NPAworldwide, accessed July 1, 2025, https://npaworldwide.com/blog/2025/05/16/ai-and-the-rise-of-resume-fraud/

3. The AI impostor: How fake job candidates are infiltrating companies - WorkLife.news, accessed July 1, 2025, https://www.worklife.news/technology/the-ai-impostor-how-fake-job-candidates-are-infiltrating-companies/

4. How AI-Generated Resumes Impact the Staffing Industry, accessed July 1, 2025, https://info.recruitics.com/blog/ai-generated-resumes-the-staffing-industry

5. AI's Impact on Hiring in 2025 - Resume Genius Survey, accessed July 1, 2025, https://resumegenius.com/blog/job-hunting/ai-impact-on-hiring

6. DetectRL: Benchmarking LLM-Generated Text Detection in Real-World Scenarios - arXiv, accessed July 1, 2025, https://arxiv.org/abs/2410.23746

7. AI Content Detectors: Protecting Against Plagiarism in 2024 - Perplexity, accessed July 1, 2025, https://www.perplexity.ai/page/ai-content-detectors-protectin-6nXsQQZ2SPaPp2JscRGxKw

8. Marked by the Machine: Exploring Watermarks in LLMs | by Rithesh ..., accessed July 1, 2025, https://medium.com/@rithesh18.k/marked-by-the-machine-exploring-watermarks-in-llms-d22fe3d8ef77

9. The Role of Multi-Modal AI Agents for Candidate Screening in Modern Recruitment, accessed July 1, 2025, https://www.maayu.ai/candidate-screening-ai-agent/

10. Advanced Methods for Identifying Fraudulent Candidates in Life Sciences Hiring, accessed July 1, 2025, https://www.warmanobrien.com/resources/blog/identifying-fraudulent-candidates-in-life-sciences-industry-hiring--best-practices-for-hr-and-decision-makers/

11. Strategic Centaurs: Harnessing Hybrid Intelligence for the Speed of ..., accessed July 1, 2025, https://mwi.westpoint.edu/strategic-centaurs-harnessing-hybrid-intelligence-for-the-speed-of-ai-enabled-war/

12. AI vs. Human Recruiters: The Benefits of a Personalized Hiring Experience, accessed July 1, 2025, https://burnettspecialists.com/blog/ai-vs-human-recruiters-the-benefits-of-a-personalized-hiring-experience/

13. Flood of AI-generated resumes causes chaos for recruiters, who resort to AI to screen them, accessed July 1, 2025, https://mashable.com/article/ai-generated-resumes-overwhelming-recruiters

14. Challenges Faced by Job Boards and the Impact of AI, accessed July 1, 2025, https://info.recruitics.com/blog/challenges-faced-by-job-boards-and-the-impact-of-ai

15. AI and Job Seekers: Navigating the Challenges of Deception and Effective Recruitment - HRTech Edge | HR Technology News, Interviews & Insights, accessed July 1, 2025, https://hrtechedge.com/ai-and-job-seekers-navigating-the-challenges-of-deception-and-effective-recruitment/

16. The Truth About AI-Generated Resumes - TestGorilla, accessed July 1, 2025, https://www.testgorilla.com/blog/ai-generated-resumes/

17. Recruiters 'Overwhelmed' by Number of AI-Generated Applications - Saville Assessment, accessed July 1, 2025, https://www.savilleassessment.com.au/resources/articles-white-papers/recruiters-overwhelmed-by-number-of-ai-generated-applications/

18. Why AI Resume Builders Hurt Tech Job Seekers | Built In, accessed July 1, 2025, https://builtin.com/articles/risks-ai-resume-builders

19. Was This Written by AI? How to Spot It In Resumes, Cover Letters & Job Posts, accessed July 1, 2025, https://www.resume-now.com/job-resources/resumes/was-this-written-by-ai

20. Any recruiter here can tell us the reality about AI resumes? - Reddit, accessed July 1, 2025, https://www.reddit.com/r/resumes/comments/1iliyv0/any_recruiter_here_can_tell_us_the_reality_about/

21. AI-Generated Resumes in Recruitment: How AI Recruitment Platforms Improve Screening Efficiency - impress.ai, accessed July 1, 2025, https://impress.ai/blogs/ai-generated-resumes-in-recruitment-how-ai-recruitment-platforms-improve-screening-efficiency/

22. The Rise of Fake Artificial Intelligence Job Applicants - The National Law Review, accessed July 1, 2025, https://natlawreview.com/article/ai-deepfakes-and-rise-fake-applicant-what-employers-need-know

23. Fake job seekers are flooding the market, thanks to AI - CBS News, accessed July 1, 2025, https://www.cbsnews.com/news/fake-job-seekers-flooding-market-artificial-intelligence/

24. Fighting the rise of fake candidates | Metaview Blog, accessed July 1, 2025, https://www.metaview.ai/resources/blog/fighting-the-rise-of-fake-candidates

25. Employers Are Buried in AI Generated Résumés - Career Group Companies, accessed July 1, 2025, https://www.careergroupcompanies.com/press-awards/employers-buried-in-ai-resumes

26. How AI-Generated Resumes Impact the Staffing Industry, accessed July 1, 2025, https://info.recruitics.com/blog/ai-generated-resumes-the-staffing-industry#:~:text=AI%2Dgenerated%20resumes%20create%20challenges,and%20hire%20quality%20candidates%20confidently.

27. AI in recruitment: 3 key challenges solved | Eagle Hill Consulting, accessed July 1, 2025, https://www.eaglehillconsulting.com/insights/ai-in-recruitment/

28. How AI Content Detectors Work To Spot AI - GPTZero, accessed July 1, 2025, https://gptzero.me/news/how-ai-detectors-work/

29. How Do AI Detectors Work? - AutoGPT, accessed July 1, 2025, https://autogpt.net/do-ai-detectors-really-work-an-in-depth-look/

30. How Do AI Detectors Work? | Techniques & Accuracy - QuillBot, accessed July 1, 2025, https://quillbot.com/blog/ai-writing-tools/how-do-ai-detectors-work/

31. Q&A: The increasing difficulty of detecting AI- versus human-generated text - Penn State, accessed July 1, 2025, https://www.psu.edu/news/information-sciences-and-technology/story/qa-increasing-difficulty-detecting-ai-versus-human

32. How NLP Powers AI-Generated Text Detection | The AI Journal, accessed July 1, 2025, https://aijourn.com/how-nlp-powers-ai-generated-text-detection/

33. 4 Ways AI Content Detectors Work To Spot AI - Surfer SEO, accessed July 1, 2025, https://surferseo.com/blog/how-do-ai-content-detectors-work/

34. How Do AI Detectors Work? Key Methods, Accuracy, and Limitations - Grammarly, accessed July 1, 2025, https://www.grammarly.com/blog/ai/how-do-ai-detectors-work/

35. How Do Perplexity and Burstiness Make AI Text Undetectable ..., accessed July 1, 2025, https://www.stealthgpt.ai/blog/how-do-perplexity-and-burstiness-make-ai-text-undetectable

36. How To Identify Whether A Content Is AI Generated Or Not | Perplexity - YouTube, accessed July 1, 2025, https://www.youtube.com/watch?v=O2CC5E4BrJY

37. Detecting AI Generated Text Based on NLP and Machine Learning Approaches - arXiv, accessed July 1, 2025, https://arxiv.org/abs/2404.10032

38. [2405.16422] AI-Generated Text Detection and Classification Based on BERT Deep Learning Algorithm - arXiv, accessed July 1, 2025, https://arxiv.org/abs/2405.16422

39. arxiv.org, accessed July 1, 2025, https://arxiv.org/html/2405.10129v1

40. StyloAI: Distinguishing AI-Generated Content with Stylometric Analysis | Request PDF, accessed July 1, 2025, https://www.researchgate.net/publication/381897231_StyloAI_Distinguishing_AI-Generated_Content_with_Stylometric_Analysis

41. AI or Human: Watermarking LLM-Generated Text | xLab | Case Western Reserve University, accessed July 1, 2025, https://case.edu/weatherhead/xlab/about/news/ai-or-human-watermarking-llm-generated-text

42. Watermarked LLMs Offer Benefits, but Leading Strategies Come With Tradeoffs, accessed July 1, 2025, https://csd.cmu.edu/news/watermarked-llms-offer-benefits-but-leading-strategies-come-with-tradeoffs

43. Digital Watermarks Are Not Ready for Large Language Models - Lawfare, accessed July 1, 2025, https://www.lawfaremedia.org/article/digital-watermarks-are-not-ready-for-large-language-models

44. Understanding multimodal interview: a game changer for tech hiring - BytePlus, accessed July 1, 2025, https://www.byteplus.com/en/topic/453075

45. Multimodal AI: The Next Evolution in Artificial Intelligence - Guru, accessed July 1, 2025, https://www.getguru.com/reference/multimodal-ai

46. New AI Verification Tech Detects Fake Job Applicants - Success Magazine, accessed July 1, 2025, https://www.success.com/ai-verification-fake-job-applicants/

47. Fake Candidates: How to Identify and Block Them, accessed July 1, 2025, https://info.recruitics.com/blog/fake-candidates

48. Employment Fraud: How Socure Stops Fake Job Applicants, accessed July 1, 2025, https://www.socure.com/blog/hiring-the-enemy-employment-fraud

49. Candidate Fraud: Your complete - BrightHire, accessed July 1, 2025, https://brighthire.com/candidate-fraud/

50. AI for Fraud Detection & Candidate Checks in Hiring - Codica, accessed July 1, 2025, https://www.codica.com/blog/how-to-use-ai-for-fraud-detection-and-candidate-verification-in-hiring-platforms/

51. How to Protect Your Company From Candidate Fraud - MBO Partners, accessed July 1, 2025, https://www.mbopartners.com/blog/independent-workforce-trends/protect-your-hiring-process-from-candidate-fraud/

52. Recruiter's Guide to Identifying Candidate Fraud, accessed July 1, 2025, https://vidcruiter.com/interview/intelligence/candidate-fraud/

53. Combating Hiring Deception: How Recruiters Spot Candidate Fraud - Abel Personnel, accessed July 1, 2025, https://www.abelpersonnel.com/combating-hiring-deception-how-recruiters-spot-candidate-fraud/

54. How to handle a candidate's inappropriate use of 'AI' during an interview?, accessed July 1, 2025, https://workplace.stackexchange.com/questions/201937/how-to-handle-a-candidates-inappropriate-use-of-ai-during-an-interview

55. AI Candidate Screening: How it is Revolutionizing Hiring? - Xobin, accessed July 1, 2025, https://xobin.com/blog/ai-candidate-screening/

56. Here's how recruiters are actually using AI in hiring | ISE, accessed July 1, 2025, https://ise.org.uk/knowledge/insights/387/heres_how_recruiters_are_actually_using_ai_in_hiring/

57. How Innovative Technology Can Solve Damaging Recruitment Frauds - RippleHire, accessed July 1, 2025, https://www.ripplehire.com/how-ai-can-solve-damaging-recruitment-frauds/

58. Human Oversight in AI Hiring: Why It Matters - Ribbon.ai, accessed July 1, 2025, https://www.ribbon.ai/blog/human-oversight-in-ai-hiring-why-it-matters

59. New for 2025: AI Recruiter Masterclass | RecruiterDNA - YouTube, accessed July 1, 2025, https://www.youtube.com/watch?v=AV7O79R8DFA

60. Multimodal AI: Transforming Evaluation & Monitoring - Galileo AI, accessed July 1, 2025, https://galileo.ai/blog/multimodal-ai-guide

61. Instructor Text Embedding, accessed July 1, 2025, https://instructor-embedding.github.io/

62. xlang-ai/instructor-embedding: [ACL 2023] One Embedder, Any Task: Instruction-Finetuned Text Embeddings - GitHub, accessed July 1, 2025, https://github.com/xlang-ai/instructor-embedding

63. Tune text embeddings | Generative AI on Vertex AI - Google Cloud, accessed July 1, 2025, https://cloud.google.com/vertex-ai/generative-ai/docs/models/tune-embeddings

64. Instruction Tuning for Large Language Models: A Survey - arXiv, accessed July 1, 2025, https://arxiv.org/html/2308.10792v5

65. What Is Instruction Tuning? | IBM, accessed July 1, 2025, https://www.ibm.com/think/topics/instruction-tuning

66. (PDF) Watermarking Large Language Models and the Generated Content: Opportunities and Challenges - ResearchGate, accessed July 1, 2025, https://www.researchgate.net/publication/385291496_Watermarking_Large_Language_Models_and_the_Generated_Content_Opportunities_and_Challenges